How Arize Phoenix Works

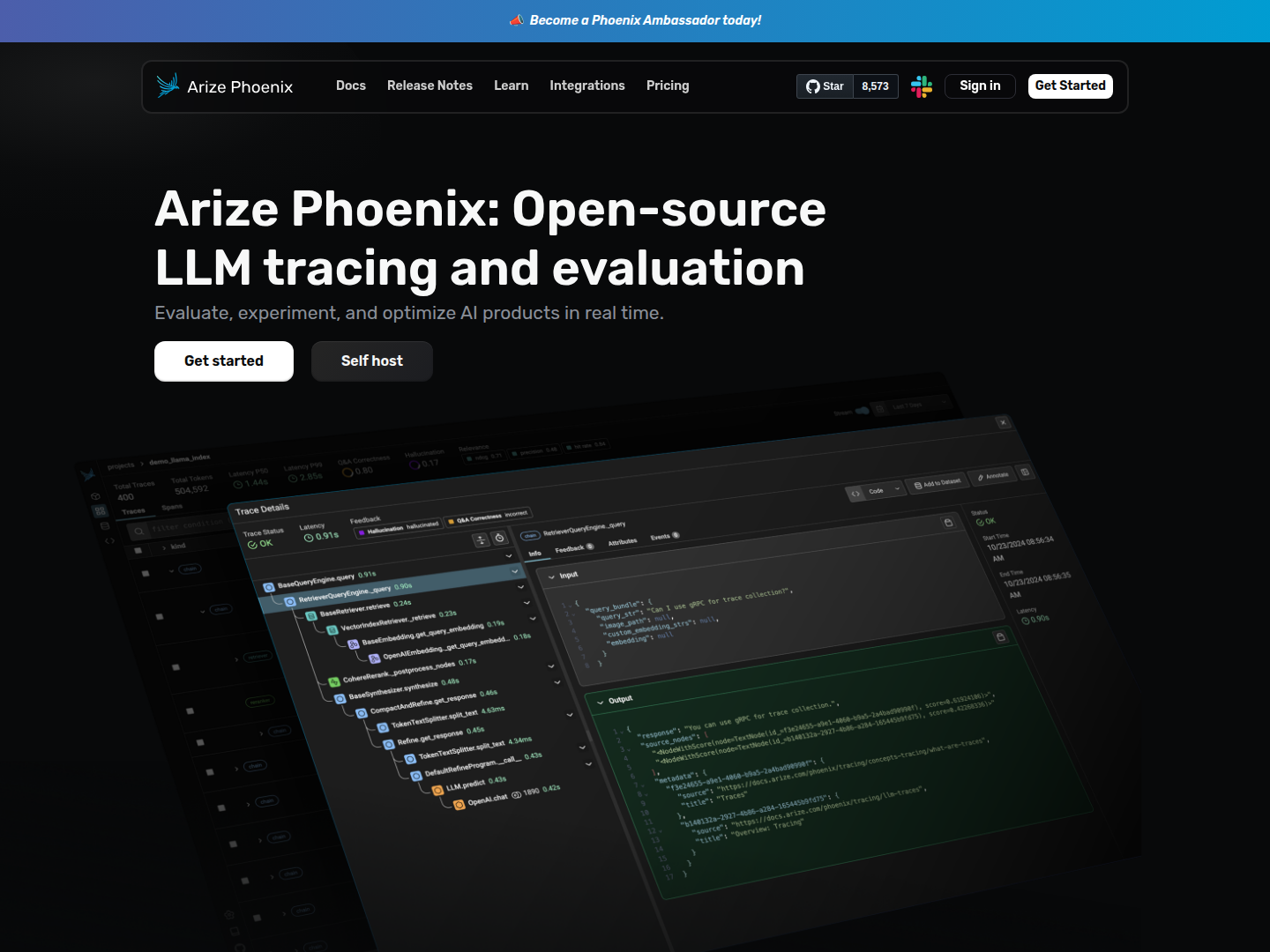

Arize Phoenix is built on top of OpenTelemetry (OTEL), which gives it a flexible, transparent, vendor-neutral foundation for LLM observability. It collects LLM application data through automatic instrumentation, and advanced users can add manual instrumentation for finer control. A core feature is the Interactive Prompt Playground, a fast, flexible sandbox for iterating on prompts and models. In the Playground, users can compare outputs and debug failures without leaving their workflow.
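To make the tracing model concrete, here is a minimal, standard-library-only sketch of the kind of record OTEL-style instrumentation produces. Each unit of work becomes a span with its own ID, a parent ID, a shared trace ID, attributes, timing, and a status.

This is not Phoenix's or OpenTelemetry's API. `start_span`, `FINISHED_SPANS`, `fake_llm`, `answer`, and the attribute names are all hypothetical illustrations. In a real setup, an OTEL tracer and exporter would produce the spans and send them to Phoenix.

```python
import time
import uuid
from contextlib import contextmanager
from contextvars import ContextVar
from typing import Optional

# Hypothetical in-memory "exporter": finished spans are appended here.
FINISHED_SPANS: list = []

# Tracks the currently active span so that child spans can record their parent.
_current_span: ContextVar[Optional[dict]] = ContextVar("current_span", default=None)


@contextmanager
def start_span(name: str, **attributes):
    """Open a span nested under the active one and record it when it ends."""
    parent = _current_span.get()
    span = {
        "name": name,
        "span_id": uuid.uuid4().hex[:16],
        # Child spans inherit the trace ID; a root span starts a new trace.
        "trace_id": parent["trace_id"] if parent else uuid.uuid4().hex,
        "parent_id": parent["span_id"] if parent else None,
        "attributes": dict(attributes),
        "status": "OK",
        "start": time.perf_counter(),
    }
    token = _current_span.set(span)
    try:
        yield span
    except Exception as exc:
        # Mark the span as failed and keep the error message as an attribute.
        span["status"] = "ERROR"
        span["attributes"]["exception.message"] = str(exc)
        raise
    finally:
        span["end"] = time.perf_counter()
        _current_span.reset(token)
        FINISHED_SPANS.append(span)


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call.
    return prompt.upper()


def answer(question: str) -> str:
    """A toy retrieve-then-generate pipeline, traced manually."""
    with start_span("chain", input=question):
        with start_span("retriever", query=question) as span:
            docs = ["doc about spans"]
            span["attributes"]["documents"] = docs
        with start_span("llm", model="hypothetical-model") as span:
            output = fake_llm(f"{docs[0]}: {question}")
            span["attributes"]["output"] = output
        return output


if __name__ == "__main__":
    answer("what is a span?")
    for s in FINISHED_SPANS:
        print(s["name"], s["parent_id"], s["status"])
```

Children finish before their parent, so the retriever and LLM spans are recorded before the chain span. The parent IDs are what let a trace viewer rebuild the tree.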

For evaluations, Phoenix provides a streamlined library designed for speed and ergonomics. It includes pre-built evaluation templates that can be customized for any task, and it can incorporate human feedback alongside automated scores. Its Dataset Clustering & Visualization capabilities use embeddings to surface semantically similar questions, document chunks, and responses. This helps isolate pockets of poor performance within large datasets.
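The sketch below shows the general template-based evaluation pattern in plain Python:

- fill a prompt template with each row's fields;
- ask a judge for a verdict;
- snap the free-form verdict onto a fixed set of allowed labels;
- let a human annotation override the automated label.

This is not Phoenix's evals API. `HALLUCINATION_TEMPLATE`, `RAILS`, `snap_to_rails`, `evaluate`, and `keyword_judge` are hypothetical, and the judge is a deterministic stub standing in for an LLM call. Giving human labels precedence is an assumption made for illustration.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical judge prompt; wording and variable names are illustrative only.
HALLUCINATION_TEMPLATE = (
    "Question: {question}\n"
    "Reference text: {reference}\n"
    "Answer: {answer}\n"
    "Is the answer supported by the reference text? "
    "Respond with a single word: factual or hallucinated."
)

# The only labels the evaluator accepts.
RAILS = ("factual", "hallucinated")


@dataclass
class EvalResult:
    label: Optional[str]               # None when the judge matched no single rail
    human_label: Optional[str] = None  # optional reviewer annotation

    @property
    def final_label(self) -> Optional[str]:
        # Assumption: a human annotation, when present, wins over the judge.
        return self.human_label or self.label


def snap_to_rails(raw: str, rails=RAILS) -> Optional[str]:
    """Map free-form judge output onto exactly one allowed label, else None."""
    words = re.findall(r"[a-z]+", raw.lower())
    matches = [r for r in rails if r in words]
    return matches[0] if len(matches) == 1 else None


def evaluate(rows, judge: Callable[[str], str], template=HALLUCINATION_TEMPLATE):
    """Fill the template per row, call the judge, and constrain its output."""
    return [EvalResult(label=snap_to_rails(judge(template.format(**row)))) for row in rows]


def keyword_judge(prompt: str) -> str:
    # Hypothetical stub for an LLM judge: "factual" if the answer text
    # appears verbatim in the reference text.
    fields = dict(line.split(": ", 1) for line in prompt.splitlines() if ": " in line)
    return "Factual." if fields["Answer"] in fields["Reference text"] else "Hallucinated!"


if __name__ == "__main__":
    rows = [
        {"question": "Capital of France?", "reference": "Paris is the capital of France.", "answer": "Paris"},
        {"question": "Capital of France?", "reference": "Paris is the capital of France.", "answer": "Lyon"},
    ]
    for result in evaluate(rows, keyword_judge):
        print(result.final_label)
```

Constraining the output to a small label set makes results easy to aggregate. Returning `None` for ambiguous output, rather than guessing, leaves those rows for human review.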

Why Use Arize Phoenix

Arize Phoenix offers significant advantages for anyone developing or deploying LLM-powered applications. Because it is open source and self-hostable, teams keep full control of their deployment, with no feature gates or usage restrictions and no risk of vendor lock-in. Teams can therefore fit it into their existing data science workflows and infrastructure.

The tool's application tracing makes each step of an LLM application's execution visible. This helps debug complex issues such as hallucinations, problematic responses, and unexpected user inputs. By visualizing these steps and flagging failures, Phoenix helps accelerate the fine-tuning process. Its evaluation and annotation features, combined with dataset clustering, help developers trace performance issues to their root causes and drive continuous improvement toward more reliable, better-performing AI products.
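The next sketch shows how clustering combined with evaluation results can point at a root cause. It groups embeddings by cosine similarity, then computes a failure rate per cluster, so that a cluster with a high failure rate marks a semantically coherent problem area.

Several parts of this example are assumptions:

- The grouping method is a deliberately simple greedy cosine-threshold scheme. It is not the algorithm Phoenix uses.
- The 3-dimensional embeddings and failure flags are made-up toy values.
- The 0.9 threshold is arbitrary.
- `greedy_clusters` and `failure_rate_by_cluster` are hypothetical helpers.

```python
import math

# Toy embeddings: the first axis loosely stands for "billing" questions,
# the second for "shipping" questions. Values are invented for illustration.
EMBEDDINGS = [
    [1.0, 0.0, 0.0],
    [0.95, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.1, 0.97, 0.05],
    [0.9, 0.05, 0.1],
]
# Hypothetical evaluation outcome per example (True = failed).
FAILED = [True, True, False, False, True]


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def greedy_clusters(embeddings, threshold=0.9):
    """Assign each vector to the first cluster whose seed is similar enough;
    otherwise it seeds a new cluster. Results depend on input order."""
    seeds = []        # index of the first member (seed) of each cluster
    assignment = []
    for i, vec in enumerate(embeddings):
        for cluster_id, seed in enumerate(seeds):
            if cosine(vec, embeddings[seed]) >= threshold:
                assignment.append(cluster_id)
                break
        else:
            seeds.append(i)
            assignment.append(len(seeds) - 1)
    return assignment


def failure_rate_by_cluster(assignment, failed):
    """Fraction of failed examples in each cluster."""
    totals, failures = {}, {}
    for cluster_id, is_failure in zip(assignment, failed):
        totals[cluster_id] = totals.get(cluster_id, 0) + 1
        failures[cluster_id] = failures.get(cluster_id, 0) + int(is_failure)
    return {c: failures[c] / totals[c] for c in totals}


if __name__ == "__main__":
    clusters = greedy_clusters(EMBEDDINGS)
    print(clusters)
    print(failure_rate_by_cluster(clusters, FAILED))
```

On this toy data, every failure lands in the "billing" cluster, which is where a developer would look first. Production tooling typically uses density-based clustering on real, high-dimensional embeddings rather than a fixed similarity threshold.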